Physically-based Sound Modeling

When developing a taxonomy of sound synthesis methods, a first distinction can be drawn between signal models and physically-based models. Any algorithm that is based on a description of the sound pressure signal and makes no assumptions about the generation mechanism belongs to the class of signal models. In physically-based models, by contrast, the sound synthesis algorithms are obtained by simulating the objects and physical interactions that are responsible for the sound generation. The goal is to develop sound synthesis algorithms suitable for embedding in interactive systems with audio-visual or audio-haptic display and gestural control. Research has focused on the simulation of musical instruments and, more recently, on the development of interactive sound models for auditory feedback in multimodal interfaces, in particular contact sounds (impacts, friction, etc.).

Contact sound modeling

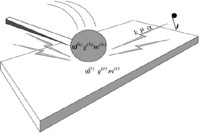

Contact sounds are a class of everyday sounds that are produced by the impact of solid bodies. When a solid object engages in an external interaction (e.g. it is struck or scraped), the forces at the contact point cause deformations to propagate through the body, and consequently its surfaces vibrate and emit sound waves. A physically-motivated model for the simulation of vibrating objects is modal synthesis, which describes the object as a bank of second-order damped mechanical oscillators (the normal modes) excited by the interaction force. The frequencies and dampings of the oscillators depend on the geometry and the material of the object, and the amount of energy transferred to each mode depends on the location of the force applied to the object. Under general hypotheses, and with appropriate boundary conditions, the linear partial differential equations describing a vibrating system admit solutions that are superpositions of vibration modes. In this sense modal synthesis is physically well motivated and widely applicable. We have developed a physically-based sound synthesis model of interacting objects, simulated through a modal description. The objects can be coupled through non-linear interaction forces that describe impulsive and continuous contact. Real-time implementations of the models have been realized as plugins for the open source real-time synthesis environment Pure Data.

In the following examples a modal mechanical resonator is excited by non-linear impact and stick-slip friction models. The number of partials, the frequencies, and the quality factors of the resonator can be controlled, as well as parameters related to the interaction force.
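
The modal description above can be illustrated with a minimal sketch, which is not the Pure Data implementation mentioned in the text. It assumes that each mode is realized as a discrete two-pole resonator whose impulse response is a decaying sinusoid; the function name `modal_resonator`, the mode frequencies, decay times, and gains are all hypothetical values chosen for illustration. The force signal here is a unit impulse rather than a non-linear contact force.

```python
import math

def modal_resonator(force, freqs_hz, decay_times_s, gains, sample_rate=44100):
    """Filter a force signal through a bank of two-pole resonators, one per mode.

    Each mode has the impulse response g * r**n * sin(w * n), i.e. a
    second-order damped oscillator sampled at `sample_rate`.
    """
    out = [0.0] * len(force)
    for f, tau, g in zip(freqs_hz, decay_times_s, gains):
        r = math.exp(-1.0 / (tau * sample_rate))   # per-sample amplitude decay
        w = 2.0 * math.pi * f / sample_rate        # mode frequency in rad/sample
        a1, a2 = 2.0 * r * math.cos(w), -r * r     # feedback coefficients
        b1 = g * r * math.sin(w)                   # gain applied to force[n-1]
        y1 = y2 = x1 = 0.0                         # y[n-1], y[n-2], force[n-1]
        for n, x in enumerate(force):
            y = a1 * y1 + a2 * y2 + b1 * x1
            out[n] += y
            y2, y1, x1 = y1, y, x
    return out

# Hypothetical three-partial resonator excited by a unit impulse.
sr = 44100
impulse = [1.0] + [0.0] * (sr // 2 - 1)
freqs = [440.0, 1210.0, 2370.0]                    # assumed mode frequencies (Hz)
gains = [1.0, 0.5, 0.25]                           # assumed modal weights
wood = modal_resonator(impulse, freqs, [0.03, 0.02, 0.01], gains, sr)   # short decays
glass = modal_resonator(impulse, freqs, [0.6, 0.4, 0.3], gains, sr)     # long decays
```

Only the decay times differ between `wood` and `glass`, which mirrors the idea that the damping of the modes can be used as a handle on the perceived material.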

- Double impact. You can hear two impact sounds, the first synthesized using a single partial (n=1) for the resonator, the second using n=3 partials. All the other parameters (hammer velocity, force parameters, etc.) have the same values in the two sounds. Note that as few as three partials already produce a veridical result.

- Wood-like material and Glass-like material. The decay time of the resonator is adjusted so that the perceived material of the resonator can be controlled. Listening tests have validated the model. The model can therefore synthesize cartoon sounds, to be used as auditory icons in human-computer interfaces.

- Varying mass and Varying stiffness. The contact time (i.e., the time after which the hammer separates from the resonator) can be controlled using the physical parameters of the contact force. The contact time is in turn related to the perceived hammer hardness. In the first example the hammer mass is increased throughout the sample; correspondingly, the contact time increases and the hammer hardness decreases (you can hear the initial bump becoming more and more audible). In the second example the force stiffness is increased throughout the sample; correspondingly, the contact time decreases and the hammer hardness increases (you can hear the attack becoming brighter and brighter).

- Braking, rubbing, and squeaking. Acoustic simulation of friction is a particularly challenging task, because continuous (strong) contact conditions require a tight and veridical integration of the synthesis layer with the control input. We have combined a recently proposed physical model of friction with the lumped modal description of resonating bodies. The resulting model successfully reproduces several salient everyday sound phenomena.
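
The dependence of contact time on hammer mass and force stiffness, described in the varying mass and varying stiffness examples above, can be reproduced with a small numerical sketch. It is a simplification and not the impact model used for the sound examples: it assumes a point-mass hammer hitting a rigid surface, a lossless power-law contact force `k * x**alpha`, and semi-implicit Euler integration. The function name `contact_time` and all parameter values are hypothetical.

```python
def contact_time(mass, stiffness, velocity=1.0, alpha=1.5, dt=1e-6):
    """Time (s) a point-mass hammer stays in contact with a rigid surface.

    x is the compression of the contact region (x > 0 while in contact);
    the restoring force is the power-law spring -stiffness * x**alpha.
    """
    x, v, t = 0.0, velocity, 0.0
    while True:
        force = -stiffness * x ** alpha if x > 0.0 else 0.0
        v += dt * force / mass     # update velocity first (semi-implicit Euler)
        x += dt * v                # then position, using the new velocity
        t += dt
        if x <= 0.0:               # hammer has separated from the surface
            return t

# Assumed reference values: 10 g hammer, stiffness 1e7 N/m^1.5, 1 m/s impact.
t_ref = contact_time(mass=0.01, stiffness=1e7)
t_heavy = contact_time(mass=0.02, stiffness=1e7)   # heavier hammer
t_stiff = contact_time(mass=0.01, stiffness=2e7)   # stiffer contact
```

Under these assumptions `t_heavy` is longer than `t_ref` and `t_stiff` is shorter, consistent with a heavier hammer sounding softer and a stiffer contact sounding harder.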

Real-time modeling of musical instruments

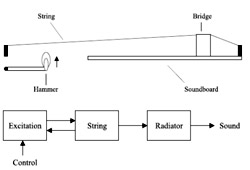

The piano is a particularly interesting instrument, both for its prominence in Western music and for the complexity of its functioning. One advantage is that its control is simple (it essentially reduces to key velocity), and physical control devices (MIDI keyboards) are widely available, which is not the case for other instruments.

As part of a collaboration between the University of Padova and Generalmusic, physical models of both electric pianos and the acoustic piano were developed as early as 1997, and ran in real time with no special hardware.

In this "historical" (1997) example, the Polonaise op. 53 by Frederic Chopin, one can hear that the sound quality of the acoustic piano model was not fully satisfactory, owing to the lack of an accurate soundboard model. Still, professional musicians who played the model reported that the instrument reacted realistically to changes in touch and dynamics, and sounded less synthetic than the usual wavetable instruments.

Single-reed instruments are another interesting case. In our work the reed is modeled as a one-mass system with constant parameters, coupled to non-linear equations describing pressure and flow within the mouthpiece. The following examples show that accurate numerical realizations of this model can simulate realistic effects (the system is discretized using the 1-step Weighted Sample method).

- Fundamental register. The overall sound quality is not satisfactory; this is mainly due to poor modeling of the bore (a single waveguide section with a low-pass filter modeling the bell termination) and can be noticed during steady state oscillations. On the other hand, the accurate modeling of the excitation mechanism provides a realistic attack transient.

- Second (clarion) register, played without any register hole, by properly adjusting the reed parameters. Both the resonance and the damping coefficient are lowered. The transition to the clarion register can be heard in the attack transient, and this behavior is qualitatively in agreement with experimental results on real clarinets.

- Reed regime ("squeaks"), obtained by giving the damping coefficient a very low value. This behavior is qualitatively in agreement with experimental results. A similar effect can be produced on a real clarinet if the player presses the reed with the teeth instead of the lip, thereby providing little damping.
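
For orientation, a one-mass reed coupled to a non-linear flow equation is commonly written in the following textbook form. This is a sketch under stated assumptions and not necessarily the exact formulation used for the examples above; all symbols are introduced here for illustration: $y$ is the reed tip displacement towards the mouthpiece, $m$, $r$, $k$ are the lumped mass, damping, and stiffness, $S_d$ is the effective reed surface, $p_m$ and $p$ are the mouth and mouthpiece pressures, $u_f$ is the flow through the reed slit, $w$ and $H$ are the slit width and rest opening, and $\rho$ is the air density.

```latex
\begin{align}
  m\,\ddot{y}(t) + r\,\dot{y}(t) + k\,y(t) &= S_d\,\Delta p(t),
    & \Delta p(t) &= p_m(t) - p(t), \\
  u_f(t) &= w\,h(t)\,\operatorname{sgn}\!\big(\Delta p(t)\big)
            \sqrt{\frac{2\,|\Delta p(t)|}{\rho}},
    & h(t) &= \max\big(H - y(t),\, 0\big).
\end{align}
```

In this notation the reed resonance is $\omega_r = \sqrt{k/m}$ and the damping coefficient is $r/m$, which are the two quantities adjusted in the clarion register and reed regime examples.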

We have also studied a non-linear reed oscillator that properly accounts for reed curling onto the mouthpiece and for reed beating. In these examples (courtesy of Maarten van Walstijn) the mouth pressure is linearly increased and then quickly decreased back.

- Constant lumped parameters. You can hear the point at which the reed suddenly enters the beating regime. The pitch is almost constant throughout the sample; it does not change significantly with increasing mouth pressure.

- Non-constant lumped parameters. The transition to the beating regime is much smoother than above: you can hear the spectrum gradually opening up with increasing blowing pressure. There is an audible increase in pitch, which is qualitatively in accordance with experimental results on real clarinets.

Physically-based synthesis of bell sound

The group collaborates with the Department of Mechanical Engineering (University of Padua) on the "Probell-Protection and Maintenance of Bells" European Research Project. In this field, the main task of the group is the development of analysis/synthesis software for evaluating the acoustic and musical quality of bells. Sound synthesis is based on physical models of the bell, the clapper, and the impact interaction. FEA simulations provided by the Department of Mechanical Engineering are used to extract the natural vibrational frequencies and the dynamical response of the bell; from these parameters we can synthesize the sound of a virtual bell starting from its profile, before the real bell is actually cast.

Moreover, several sound recordings and analyses were performed on bells provided by European bellfounders in order to extract their distinguishing features (spectrum profile, damping coefficients, modal weights).
